Photo courtesy of Freepik

Synopsis: Your brain runs on just 12 watts of power, about what a dim desk lamp needs to glow. Yet this modest biological marvel thinks, dreams, remembers your childhood, and keeps your heart beating, all while machines trying to match such feats burn through 2.7 billion watts. That’s 225 million times more electricity for the same work. Evolution spent millions of years perfecting this efficient design with 86 billion neurons. Despite all our technological swagger, nature still writes the best engineering manual. Scientists now race to copy it.

Here’s something that ought to make you sit up straight in your chair. While you’re reading these words, solving puzzles, remembering what you had for breakfast, and keeping your lungs inflating on schedule, your brain is sipping electricity like a miser nursing weak tea. The whole operation runs on a mere 12 watts, which is less power than most people use to charge their phones overnight.

Now, you might think that’s a pittance until you consider what you’re getting for that investment. Your brain houses roughly 86 billion neurons, each one a tiny processing unit connected to thousands of others through junctions called synapses. These connections number in the hundreds of trillions, forming a network so complex that scientists are still scratching their heads trying to map it completely.

The truly amusing part comes when you compare this efficiency to our supposedly advanced artificial intelligence systems. When tech companies build machines to mimic even a fraction of what your brain does naturally, they need data centers the size of warehouses, cooling systems that could refrigerate a small town, and enough electricity to power thousands of homes. The difference isn’t just notable—it’s laughable in the way nature often makes our best efforts look like amateur hour.

What Makes a Brain Tick on Pocket Change

The secret to your brain’s thriftiness lies in how it’s built from the ground up. Unlike computers that separate memory storage from processing, your neurons do both jobs simultaneously in the same place. Each connection between brain cells stores information while also performing calculations, which eliminates the energy waste of shuttling data back and forth like a confused mail carrier.

Your neurons also communicate using a clever trick called sparse coding, which means most of them stay quiet most of the time. Only the cells that need to fire actually use energy, while the rest sit idle, conserving resources like sensible farmers during a drought. This stands in stark contrast to computer chips, where electrons flow constantly through circuits whether they’re needed or not, burning power like a city that forgot to turn off its streetlights.
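The saving from sparse activity can be illustrated with a toy model. This is only a sketch under loud assumptions: every active unit costs one arbitrary unit of energy per time step, idle units cost nothing, and the 2% activity level is an illustrative choice, not a measured property of real neurons.

```python
import random

def energy_used(num_units, active_fraction, steps, cost_per_active_unit=1.0, seed=0):
    """Total energy for a population in which only active units cost energy."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(steps):
        # Each unit fires independently with probability active_fraction.
        active = sum(1 for _ in range(num_units) if rng.random() < active_fraction)
        total += active * cost_per_active_unit
    return total

# Hypothetical comparison: every unit always on versus 2% of units active.
dense = energy_used(num_units=1000, active_fraction=1.0, steps=100)
sparse = energy_used(num_units=1000, active_fraction=0.02, steps=100)
print(f"always-on: {dense:.0f}, sparse: {sparse:.0f}, ratio: {dense / sparse:.1f}x")
```

In this model the energy bill scales directly with the fraction of units that are active, which is the whole point of keeping most of them quiet.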

Then there’s the matter of how signals travel through your brain. Neurons use both electrical charges and chemical messengers to pass information along, a dual system that’s remarkably energy efficient. The chemical part, involving molecules called neurotransmitters, allows your brain to amplify or dampen signals naturally without needing extra power supplies. It’s biological engineering at its finest, refined through countless generations of trial and error.

The Energy Glutton Called Artificial Intelligence

Modern AI systems, for all their impressive capabilities, have an appetite that would embarrass a gold rush town saloon. Training a large language model—the kind that can write essays or answer questions—requires somewhere in the neighborhood of 2.7 billion watts. That’s not a typo. We’re talking about enough electricity to keep a quarter million American homes running for an entire year.

The problem starts with the hardware itself. AI relies on graphics processing units, or GPUs, which were originally designed to render video game graphics at lightning speed. These chips are remarkably good at the parallel processing AI needs, but they’re about as energy efficient as heating your house by leaving the oven door open. Each GPU can draw several hundred watts, and a serious AI training operation might use thousands of them running simultaneously.
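A quick back-of-the-envelope check puts these figures side by side. The 12-watt and 2.7-billion-watt values are the ones quoted in this article; the cluster of 10,000 GPUs at 400 watts each is a hypothetical example chosen only to match "several hundred watts" and "thousands of them", not a description of any real installation.

```python
BRAIN_WATTS = 12          # figure quoted in the article
TRAINING_WATTS = 2.7e9    # figure quoted in the article

def cluster_watts(num_gpus, watts_per_gpu):
    """Power drawn by the GPUs alone, ignoring cooling and networking."""
    return num_gpus * watts_per_gpu

ratio = TRAINING_WATTS / BRAIN_WATTS
print(f"quoted training power / brain power = {ratio:,.0f}")

# Hypothetical cluster (illustrative numbers only).
gpus_only = cluster_watts(num_gpus=10_000, watts_per_gpu=400)
print(f"GPU-only draw: {gpus_only / 1e6:.1f} MW, "
      f"about {gpus_only / BRAIN_WATTS:,.0f} brains' worth of power")
```

The first line reproduces the 225-million-fold ratio from the synopsis; the second shows that even the GPUs of a modest hypothetical cluster draw as much power as hundreds of thousands of brains.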

But the GPUs are only part of the story. All that computation generates heat—tremendous amounts of it—which means you need industrial cooling systems running around the clock to prevent your expensive equipment from melting into expensive slag. Add in the servers, networking equipment, and the massive infrastructure needed to feed data to these hungry machines, and you’ve got an energy bill that would make even a wealthy industrialist wince. The whole arrangement makes your brain’s 12-watt operation look like the shrewdest bargain since buying Manhattan for beads.

Evolution's Long Game Versus Silicon Valley's Sprint

Your brain didn’t get this efficient overnight, or even in a million overnights. Evolution has been tinkering with neural systems for roughly 500 million years, ever since the first simple creatures developed clusters of nerve cells to coordinate their movements. That’s half a billion years of testing, failing, adapting, and improving—a research and development timeline that makes even the oldest technology companies look like impatient toddlers.

During this extended trial period, nature ruthlessly eliminated wasteful designs. Any creature whose brain consumed too much energy was at a survival disadvantage, likely to be outcompeted by more efficient neighbors who could get by on less food. The result is that every aspect of your brain’s architecture has been pressure-tested for energy efficiency across countless generations, in environments ranging from tropical jungles to arctic tundra.

Computer engineers, by comparison, have been seriously working on AI for maybe seventy years, and modern deep learning is barely a teenager. They’re trying to recreate in decades what took nature eons to perfect, and they’re doing it without the benefit of natural selection’s brutal editing process. It’s rather like expecting an apprentice carpenter to build a cathedral in a weekend using only the tools he invented that morning: ambitious, certainly, but perhaps a touch optimistic about the timeline.

The Architecture of Brilliance

Inside your skull sits a three-pound universe of staggering complexity. Those 86 billion neurons aren’t just randomly scattered about like seeds thrown on hard ground. They’re organized into distinct regions, each specialized for different tasks, yet all working together in harmonious coordination. Your visual cortex processes what you see, your motor cortex controls movement, your hippocampus files away memories, and dozens of other areas handle everything from language to emotion to balance.

What makes this arrangement so efficient is that information doesn’t have to travel far to get where it needs to go. Related functions sit near each other, reducing the energy cost of communication. It’s like designing a factory floor where the assembly line flows logically from one station to the next, rather than requiring workers to run across the building for every step. This spatial organization alone saves enormous amounts of power compared to computer systems where data might ping-pong across different continents through fiber optic cables.

The connections between neurons also display remarkable intelligence in their structure. Frequently used pathways get strengthened through a process called long-term potentiation, making those routes faster and more efficient over time. It’s self-optimizing hardware that gets better at the tasks you practice most often, without requiring a software update or a visit from the IT department. Meanwhile, unused connections get pruned away, eliminating waste and keeping the system lean. No computer yet designed can match this elegant self-improvement scheme.
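The strengthen-what-you-use, prune-what-you-don’t idea can be sketched in a few lines. This is a toy, Hebbian-style rule written for illustration, not a model of the biochemistry behind long-term potentiation; the function name, the learning rate, the decay rate, and the pruning threshold are all assumptions.

```python
def update_weights(weights, usage_counts, learning_rate=0.1, decay=0.05, prune_below=0.01):
    """Strengthen used connections, decay unused ones, and prune the weakest."""
    updated = {}
    for connection, weight in weights.items():
        uses = usage_counts.get(connection, 0)
        if uses > 0:
            # Used pathway: nudge the weight toward 1.0 in proportion to use.
            weight += learning_rate * uses * (1.0 - weight)
        else:
            # Unused pathway: let the weight fade a little.
            weight *= (1.0 - decay)
        weight = min(weight, 1.0)
        if weight >= prune_below:
            updated[connection] = weight  # connections below the threshold are dropped
    return updated

weights = {"A->B": 0.5, "A->C": 0.5, "A->D": 0.012}
for _ in range(10):
    weights = update_weights(weights, usage_counts={"A->B": 2})
print(weights)
```

After ten rounds the practiced connection has grown close to its maximum, the neglected one has faded, and the weakest has been removed altogether.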

Why AI Needs a Football Field When Your Brain Needs a Walnut

The physical space requirements tell their own amusing story. Your entire brain fits comfortably inside your skull with room to spare for protective fluid and cushioning. It weighs about as much as a bag of flour and occupies less volume than a large grapefruit. Despite this compact packaging, it handles every aspect of keeping you alive and functioning, from regulating your heartbeat to composing symphonies, should you be musically inclined.

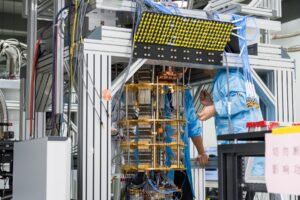

An AI system with comparable capabilities, on the other hand, needs a data center that might occupy thousands of square feet. Rows upon rows of server racks filled with specialized processors stretch as far as the eye can see, each one humming away and generating enough heat to roast a decent-sized pig. The cooling systems alone often take up as much space as the computing equipment, with massive air conditioning units working overtime to prevent thermal catastrophe.

This size difference matters for more than just real estate costs. The farther electrical signals have to travel, the more energy gets wasted as heat along the way. Your brain’s compact design means signals only need to travel inches at most, usually just tiny fractions of an inch. AI systems, with components spread across building-sized facilities, lose tremendous amounts of energy simply moving information from point A to point B. It’s the difference between shouting across a room versus trying to have a conversation between mountaintops—one takes considerably more effort than the other.

The Learning Curve Nobody Expected

When you learned to ride a bicycle, you probably fell down a few times, wobbled uncertainly for a while, and then one day found yourself pedaling confidently down the street. Your brain accomplished this feat by adjusting the strength of various neural connections, fine-tuning your balance and coordination through practice. The whole process required no additional power supply, no expensive equipment, and probably cost you the energy equivalent of a couple of sandwiches.

Teaching an AI system a new task follows a dramatically different script. Engineers must feed it enormous datasets, often millions or billions of examples, while the system slowly adjusts billions of internal parameters through a process called backpropagation. This training phase can take weeks or months of constant computation, burning through megawatt-hours of electricity like a spendthrift heir burning through a fortune. The contrast between biological and artificial learning couldn’t be more stark.
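To give a feel for what "slowly adjusting parameters" means, here is a minimal sketch of gradient descent on a model with just two parameters. Backpropagation is the technique that computes these same kinds of gradients through many layers; this example is a deliberately tiny stand-in, and the data, learning rate, and epoch count are illustrative assumptions.

```python
def train_linear(xs, ys, learning_rate=0.05, epochs=500):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction error for every example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass: gradients of the mean squared error.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Small step downhill for each parameter.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Even this toy needs hundreds of passes over five data points to settle on two numbers. Scale the same loop up to billions of parameters and billions of examples, and the electricity bill follows.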

Your brain also has a neat trick called transfer learning, though it does this naturally without needing the fancy name. Skills and knowledge from one domain help you learn related things faster. Once you can ride a bicycle, learning to ride a motorcycle comes easier. Your brain automatically applies relevant patterns and fills in gaps intelligently. Modern AI systems can do something similar, but it requires careful engineering and still lacks the fluid, intuitive quality of human learning. We pick things up; they have to be taught with industrial thoroughness.

The Temperature Problem Nobody Talks About

Your brain operates within a remarkably narrow temperature range, roughly the same temperature as the rest of your body, give or take a degree. It manages this despite conducting trillions of operations per second, all without overheating or requiring external cooling fans. The trick lies partly in using chemical reactions that don’t generate much waste heat, and partly in being efficient enough that there isn’t much excess heat to worry about in the first place.

Computer processors, by contrast, get hot enough to fry an egg if you removed their cooling systems, and some high-performance chips would damage themselves in seconds without active temperature management. Data centers running AI workloads must maintain strict climate control, often keeping rooms at temperatures cool enough to make employees wear jackets indoors. The electricity used for cooling often matches or exceeds the power used for computation itself, effectively doubling the energy bill.
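The "doubling" claim is simple arithmetic, sketched below. The 1 MW computing load and the three cooling ratios are hypothetical values picked for illustration; real facilities vary widely.

```python
def total_facility_power(compute_watts, cooling_ratio):
    """Compute power plus cooling, where cooling_ratio is cooling watts per watt of compute."""
    return compute_watts * (1.0 + cooling_ratio)

compute = 1_000_000  # hypothetical 1 MW of computing load
for cooling_ratio in (0.2, 0.5, 1.0):
    total = total_facility_power(compute, cooling_ratio)
    print(f"cooling ratio {cooling_ratio:.1f}: total {total / 1e6:.1f} MW, "
          f"overhead factor {total / compute:.1f}")
```

When cooling draws as much as the computation itself (a ratio of 1.0), the facility needs twice the power that the processors alone would.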

This heat problem limits how dense we can pack computer components. Squeeze them too close together, and the heat becomes unmanageable, even with the best cooling systems money can buy. Your brain, meanwhile, has no such concerns. Its neurons sit cheek by jowl in dense layers, connected by fibers that interweave like a fantastically complex tapestry, all without generating problematic heat. It’s operating at a density and efficiency level that would make chip designers weep with envy, if only they could figure out how to replicate it with silicon.

The Real Cost of Silicon Thinking

When we talk about AI’s energy consumption, we’re not just discussing theoretical numbers or abstract comparisons. Real data centers consume real electricity from real power grids, electricity that has to be generated somewhere, often by burning fossil fuels. A single training run for a large AI model can produce carbon emissions equivalent to what five cars generate in their entire lifetimes. That’s before you even deploy the thing and let people use it.

The financial costs follow naturally from the energy costs. Tech companies spend hundreds of millions of dollars building data centers and even more keeping them running. Microsoft, Google, and other AI leaders are making deals with nuclear power plants and exploring dedicated energy sources just to keep their machines fed. We’ve reached a point where the limiting factor in AI development isn’t always the algorithms or the data—it’s increasingly the raw electrical power available to train and run these systems.

Meanwhile, your brain handles its business on roughly 250 kilocalories a day, about what two or three bananas provide. At 12 watts, a full day of thinking, learning, creating, and problem-solving adds up to less than a third of a kilowatt-hour, which a 1,000-watt toaster would burn through in about seventeen minutes. No special power plants required, no cooling towers, no backup generators. Nature solved the efficiency problem so thoroughly that we barely notice it, except when we try building artificial alternatives and discover just how good we had it.
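The daily energy budget implied by a 12-watt brain can be checked with a few lines of arithmetic. The only inputs are the article’s 12-watt figure and standard unit conversions.

```python
BRAIN_WATTS = 12                  # figure quoted in the article
SECONDS_PER_DAY = 24 * 60 * 60
JOULES_PER_KCAL = 4184            # one food calorie (kilocalorie) in joules

joules_per_day = BRAIN_WATTS * SECONDS_PER_DAY
kcal_per_day = joules_per_day / JOULES_PER_KCAL
kwh_per_day = BRAIN_WATTS * 24 / 1000

print(f"{joules_per_day:,} J per day")
print(f"{kcal_per_day:.0f} kcal per day")
print(f"{kwh_per_day:.3f} kWh per day")
```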

Neuromorphic Computing Takes Notes from Nature

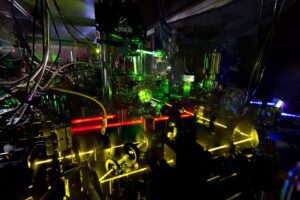

Scientists and engineers, being neither blind nor foolish, have noticed this rather substantial efficiency gap. This observation has sparked a fascinating field called neuromorphic computing, which essentially means building computer chips that work more like brains than like traditional processors. Instead of the conventional architecture where calculations happen in one place and memory lives in another, neuromorphic chips combine both functions in the same components, just like neurons do.

These brain-inspired chips use artificial neurons and synapses that communicate through electrical spikes, mimicking the way biological neurons fire. Early results show promise that’s almost startling. Some neuromorphic systems can perform certain tasks using a thousand times less power than conventional computers, bringing them much closer to the brain’s efficiency range. They’re particularly good at pattern recognition and sensory processing, the kinds of things your brain excels at naturally.
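A common textbook abstraction for this kind of spiking behavior is the leaky integrate-and-fire neuron, sketched here. It is not the circuit used by any particular neuromorphic chip, and the threshold, leak factor, and input values are illustrative assumptions.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes."""
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        # Keep a fraction of the stored potential, then add the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spike_times.append(t)  # fire a spike...
            potential = reset      # ...and start charging again from rest
    return spike_times

quiet = simulate_lif([0.05] * 50)  # weak input never reaches the threshold
busy = simulate_lif([0.3] * 50)    # stronger input produces regular spikes
print(f"weak input: {len(quiet)} spikes, strong input: {len(busy)} spikes")
```

The neuron with weak input stays silent and, in an event-driven design, would cost next to nothing. Only the neuron with something to report spends energy on spikes.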

Companies and research labs worldwide are now racing to develop better neuromorphic hardware. Intel has its Loihi chip, IBM developed TrueNorth, and academic institutions are exploring dozens of different approaches. The goal is audacious: to capture even a fraction of the brain’s power efficiency in artificial form. If they succeed, it could revolutionize everything from smartphones to robotics, making devices that can think intelligently without needing to be plugged into wall sockets every few hours. We’re essentially trying to learn from roughly five hundred million years of biological homework, and paying attention might finally be paying dividends.

The Frontier Where Biology Meets Silicon

We stand at a curious junction in technological history. For decades, computers got faster through sheer brute force: smaller transistors, higher clock speeds, more cores, more everything. That approach is running into hard physical limits, set by the same laws of thermodynamics that your brain has been living within for millions of years. The silicon industry now faces a choice: keep pushing against those walls, or start building different kinds of walls entirely.

The emerging consensus suggests that future computing will likely blend biological principles with engineered precision. We might see chips that use optical signals like light beams alongside electrical currents, mimicking how neurons use both electrical and chemical communication. We might develop systems that sleep and consolidate memories like brains do, saving enormous amounts of energy during downtime. The possibilities open up considerably once you stop trying to build faster versions of the same old designs and start asking what nature might teach.

What’s certain is that your brain will remain the gold standard for efficient intelligence for quite some time yet. It performs miracles of computation on a power budget that wouldn’t keep a laptop awake through lunch. Every thought you think, every memory you form, every decision you make happens inside the most sophisticated energy-efficient computer ever developed. And while we’re getting better at building artificial alternatives, nature still holds most of the patents worth copying. The next revolution in computing won’t come from making machines faster—it’ll come from making them smarter about using the power they’ve got, just like your remarkable brain already does.

FAQs

Current neuromorphic chips use 1,000x less power than traditional computers for some tasks, but they’re still far from matching the brain’s 12-watt miracle. We’re closing the gap, though—it’s the most promising path forward in computing.

We’re already hitting limits. Some tech companies are buying entire power plants. The environmental and financial costs are becoming unsustainable, forcing the industry to find smarter, not just bigger, solutions.

Yes! Your brain uses about 12 watts continuously, roughly what a small LED bulb draws. That powers 86 billion neurons performing trillions of calculations every second—nature’s best efficiency trick yet.

Not with current technology. But neuromorphic computing, quantum processors, and bio-hybrid systems might eventually get close. We’re essentially trying to reverse-engineer millions of years of evolution in a few decades.

Training is the energy monster, using billions of watts over weeks. Running a trained AI uses far less but still vastly more than your brain—a single query to a large AI model can use as much power as charging your phone.