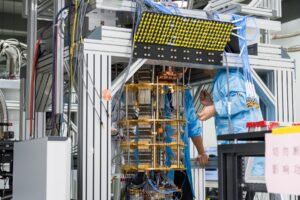

New Quantum Processor / Courtesy of Princeton University

Synopsis: Princeton University researchers have developed a superconducting qubit that holds quantum information for up to 1.68 milliseconds, roughly three times longer than the previous best laboratory result and about 15 times longer than the qubits used by Google and IBM. The breakthrough relies on tantalum circuits fabricated on high-purity silicon substrates, addressing the decoherence problem that has plagued quantum computing for years. The researchers suggest that swapping these qubits into Google’s Willow processor would improve its performance by a factor of 1,000, potentially bringing scientifically relevant quantum computers within reach by 2030.

Quantum computing has been stuck in a frustrating loop for years. The technology promises to revolutionize everything from drug discovery to climate modeling, but there’s been one massive problem: the quantum bits that power these machines lose their information almost instantly.

Researchers at Princeton University just achieved coherence times of up to 1.68 milliseconds with their new kind of quantum processor—roughly three times longer than the best laboratory results and 15 times longer than the superconducting qubits deployed in commercial quantum processors by tech giants like Google and IBM. That might sound like a tiny increment, but in quantum computing, milliseconds matter enormously.

The Princeton team didn’t just tinker around the edges of existing technology. They went back to basics, swapping out traditional materials for tantalum circuits built on ultra-pure silicon. The result addresses what’s been quantum computing’s Achilles heel: qubits that fail before they can complete useful calculations. With this advance, researchers are now talking about practical quantum computers arriving by the end of the decade—not as a distant possibility, but as an achievable goal.

Understanding the Coherence Problem

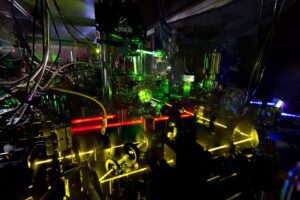

Courtesy of Starworld Lab

The challenge with quantum computing boils down to something called coherence time. This refers to how long a qubit can maintain its quantum state before the environment around it causes that delicate state to collapse. Once coherence is lost, the information vanishes, and any calculation in progress becomes worthless.

Current quantum processors face this problem constantly. The qubits inside machines built by Google and IBM typically maintain coherence for just fractions of a millisecond. During that brief window, these processors need to perform all their calculations, apply error correction, and extract results. It’s like trying to solve a complex math problem while someone keeps erasing your work every few seconds.

This limitation has kept quantum computers confined largely to research labs and proof-of-concept demonstrations. Real-world problems often require longer, more complex calculations that today’s qubits simply can’t sustain. The coherence bottleneck has been the primary reason why quantum computing hasn’t yet delivered on its transformative promises.

The Tantalum and Silicon Solution

Tantalum / Courtesy of Wikimedia Commons

The Princeton breakthrough centers on an unexpected combination of materials. The research team fabricated their qubits using tantalum circuits placed on substrates made from extremely pure silicon. This pairing proved far more effective at preserving quantum coherence than the aluminum-on-sapphire approach that has dominated the field for years.

Tantalum offers unique advantages as a superconducting material. It has fewer defects at the atomic level compared to aluminum, which means there are fewer places where quantum information can leak away. The high-purity silicon substrate adds another layer of protection by creating an exceptionally clean environment for the quantum operations to occur.

The results speak for themselves. Where existing commercial qubits might hold their quantum state for around 100 microseconds, these tantalum-on-silicon qubits maintained coherence for 1,680 microseconds. That’s not just an incremental improvement—it’s a fundamental shift in what becomes computationally possible when you have that much more time to work with quantum information.
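To make the comparison concrete, here is a minimal Python sketch of the arithmetic. It uses the two figures quoted above (100 and 1,680 microseconds) and, as a simplifying assumption not stated by the researchers, models coherence loss as a plain exponential decay. The function name `surviving_fraction` is illustrative.

```python
import math

# Coherence times quoted in the article, in microseconds.
COMMERCIAL_COHERENCE_US = 100.0
PRINCETON_COHERENCE_US = 1680.0


def surviving_fraction(elapsed_us: float, coherence_us: float) -> float:
    """Fraction of coherence left after elapsed_us.

    Assumption: simple exponential decay, exp(-t / T). Real devices
    can deviate from this idealized model.
    """
    return math.exp(-elapsed_us / coherence_us)


improvement = PRINCETON_COHERENCE_US / COMMERCIAL_COHERENCE_US
print(f"Coherence improvement: {improvement:.1f}x")

# Compare how much coherence remains after the same 100-microsecond task.
for label, t_coh in [("commercial", COMMERCIAL_COHERENCE_US),
                     ("tantalum-on-silicon", PRINCETON_COHERENCE_US)]:
    print(f"{label}: {surviving_fraction(100.0, t_coh):.1%} remaining")
```

Under this assumed model, a 100-microsecond task leaves about 37% of the coherence on the shorter-lived qubit and about 94% on the longer-lived one. The raw ratio works out to 16.8, in line with the "roughly 15 times" figure.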

What This Means for Error Correction

Quantum computers don’t just need long coherence times to perform calculations. They also need time to run error correction routines that catch and fix mistakes before they corrupt the final answer. Error correction is essential because qubits are inherently noisy and prone to errors.

The current approach requires using multiple physical qubits to create one reliable logical qubit through error correction. This overhead is enormous—you might need 1,000 physical qubits just to create one logical qubit that can be trusted to give accurate results. Much of the coherence time gets consumed by these error-checking procedures rather than actual computation.

With 15 times longer coherence, these new qubits could dramatically reduce the error correction burden. Calculations that currently require thousands of physical qubits might soon be achievable with far fewer. The Princeton team estimates that integrating their qubits into Google’s Willow processor could improve performance by a factor of 1,000, transforming what kinds of problems become solvable.
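One common rule of thumb helps show why a modest gain in physical qubit quality can compound into a much larger gain after error correction. The sketch below uses the standard surface-code heuristic, in which the logical error rate scales as (p / p_th)^((d + 1) / 2) for physical error rate p and code distance d. The threshold, prefactor, and starting error rate are illustrative assumptions, as is the idea that a 15-fold coherence gain translates directly into a 15-fold lower physical error rate. This is not the Princeton team’s own calculation.

```python
def logical_error_rate(p: float, distance: int,
                       p_threshold: float = 1e-2,
                       prefactor: float = 0.1) -> float:
    """Heuristic surface-code scaling: A * (p / p_th) ** ((d + 1) / 2).

    p_threshold and prefactor are assumed, illustrative values.
    """
    return prefactor * (p / p_threshold) ** ((distance + 1) / 2)


baseline_p = 1e-3             # assumed physical error rate today
improved_p = baseline_p / 15  # assumes error falls in step with coherence

for d in (3, 5, 7):
    gain = logical_error_rate(baseline_p, d) / logical_error_rate(improved_p, d)
    print(f"distance {d}: logical error rate improves about {gain:,.0f}x")
```

In this model the gain grows as a power of the code distance (15 squared at distance 3, 15 cubed at distance 5), which is why improvements to individual qubits matter more as processors grow larger.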

The Path to Practical Quantum Computing

Courtesy of Starworld Lab

The quantum computing industry has long promised applications that could change medicine, finance, materials science, and artificial intelligence. Yet these promises have remained just over the horizon because the hardware wasn’t quite ready. Coherence times have been the limiting factor holding everything back.

With this breakthrough, the timeline for practical quantum computing suddenly looks more concrete. The researchers behind this work suggest that scientifically relevant quantum computers—machines that can solve problems with real commercial or research value—could arrive by 2030. That’s not a vague “someday” prediction but a specific target based on measurable progress.

The pharmaceutical industry stands to benefit particularly quickly. Drug discovery currently relies on trial and error because simulating molecular interactions is too computationally intensive for classical computers. Quantum computers with sufficient coherence could model these interactions accurately, potentially identifying promising drug candidates in days rather than years. Climate modeling, optimization problems in logistics, and breakthroughs in battery technology could follow closely behind.

How This Compares to Current Industry Leaders

Google and IBM have been racing to build the most capable quantum processors, each taking slightly different approaches. Google’s Willow processor, announced in December 2024, demonstrated impressive error correction capabilities. IBM has been steadily scaling up the number of qubits in its machines, with systems now exceeding 1,000 qubits.

Both companies rely on superconducting qubits made primarily from aluminum circuits on sapphire substrates. These have been the industry standard for years because they’re relatively well-understood and manufacturable at scale. The trade-off has been accepting those shorter coherence times as simply the cost of doing business in quantum computing.

The Princeton approach doesn’t necessarily make Google or IBM’s work obsolete. Rather, it offers a potential upgrade path. The tantalum-on-silicon qubits could theoretically be integrated into existing quantum processor architectures. This compatibility means the tech giants could adopt the breakthrough without completely redesigning their systems, accelerating the path to improved performance across the industry.

The Science Behind Superconducting Qubits

Superconducting qubits work by exploiting a quantum property called superposition. While a classical computer bit must be either a zero or a one, a quantum bit can exist in a weighted combination of both states until it is measured. Quantum algorithms manipulate these combinations so that wrong answers tend to cancel out and right answers reinforce one another, which gives quantum computers large, in some cases exponential, advantages for certain types of calculations.
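As a rough illustration of superposition, a single qubit’s state can be written as two complex amplitudes, one for 0 and one for 1, whose squared magnitudes give the measurement probabilities. The following Python sketch uses only the standard library, and the helper name is illustrative.

```python
import math


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for the state alpha|0> + beta|1>.

    The amplitudes are normalized first so the probabilities sum to 1.
    """
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return abs(alpha / norm) ** 2, abs(beta / norm) ** 2


# An equal superposition: measuring gives 0 or 1 with equal probability.
p0, p1 = measurement_probabilities(1, 1)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")

# A classical-like state: always measured as 0.
print(measurement_probabilities(1, 0))
```

Decoherence can be thought of as the environment scrambling these amplitudes before the computation has finished using them.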

The superconducting part comes from cooling these qubits to temperatures near absolute zero—colder than outer space. At these extreme temperatures, certain materials lose all electrical resistance and allow current to flow indefinitely. The qubits are essentially tiny circuits where electric current can exist in a superposition of flowing clockwise and counterclockwise simultaneously.

Maintaining this delicate quantum superposition requires isolating the qubits from environmental noise. Heat, electromagnetic radiation, and even cosmic rays can cause decoherence by essentially measuring the qubit unintentionally. The longer you can keep the qubit isolated and coherent, the more complex the quantum algorithm you can run before the quantum state collapses.
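The link between coherence time and algorithm complexity can be made concrete with a back-of-the-envelope operation budget. The sketch below assumes a hypothetical 50-nanosecond gate duration (an illustrative figure, not one given by the researchers) and counts how many sequential operations fit inside the coherence windows quoted earlier in the article.

```python
# Coherence windows quoted in the article, converted to nanoseconds.
COHERENCE_NS = {
    "typical commercial qubit": 100_000,     # about 100 microseconds
    "tantalum-on-silicon qubit": 1_680_000,  # 1.68 milliseconds
}

GATE_DURATION_NS = 50  # hypothetical gate time, assumed for illustration


def max_sequential_gates(coherence_ns: int, gate_ns: int = GATE_DURATION_NS) -> int:
    """Upper bound on back-to-back gates that fit in one coherence window."""
    return coherence_ns // gate_ns


for name, window in COHERENCE_NS.items():
    print(f"{name}: up to {max_sequential_gates(window):,} gates")
```

This is only an upper bound. In practice a useful circuit has to finish well inside the window, since errors accumulate long before coherence is fully lost.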

Manufacturing Challenges and Scalability

Creating qubits with extended coherence times in a laboratory setting is one achievement. Manufacturing them reliably at scale presents an entirely different set of challenges. The semiconductor industry has spent decades perfecting the fabrication of classical computer chips, but quantum processors demand even higher purity and precision.

The Princeton team’s reliance on high-purity silicon offers both opportunities and obstacles. Silicon fabrication is extremely mature technology—the entire computer industry runs on silicon chips. However, the purity requirements for quantum computing exceed what’s needed for conventional electronics. Even trace amounts of impurities can create defects that destroy quantum coherence.

The tantalum circuits add another layer of complexity. While tantalum is well-understood in other applications, using it for superconducting qubits at scale hasn’t been thoroughly proven. Companies will need to develop new fabrication processes, quality control methods, and testing procedures. The good news is that if these challenges can be overcome, the existing infrastructure of the semiconductor industry could be leveraged to produce quantum processors more efficiently.

Competition Beyond Superconducting Qubits

Superconducting qubits aren’t the only game in quantum computing. Several other approaches are competing for dominance, each with distinct advantages and limitations. Understanding this broader landscape helps contextualize what the Princeton breakthrough really means for the future of the field.

Trapped ion quantum computers, developed by companies like IonQ and Quantinuum (formed from Honeywell’s quantum computing division), already achieve impressive coherence times, sometimes measured in minutes rather than milliseconds. These systems trap individual atoms using electromagnetic fields and manipulate them with lasers. The downside is that trapped ion systems are harder to scale up and generally operate more slowly than superconducting qubits.

Photonic quantum computers represent another approach, using particles of light rather than superconducting circuits. Companies like PsiQuantum are betting on this technology because photonic systems can potentially operate at room temperature. However, creating the interactions between photons needed for quantum computation remains technically challenging. The race isn’t over, but this superconducting qubit breakthrough substantially strengthens one particular pathway forward.

Investment and Commercial Interest

The quantum computing sector has attracted billions in investment over the past few years, with both venture capital and government funding pouring into the field. The promise of quantum advantage—the point where quantum computers outperform classical computers at useful tasks—has kept investors interested despite the long development timeline.

This latest breakthrough could accelerate commercial interest significantly. When coherence times were measured in dozens of microseconds, the path to practical applications remained murky. Now that researchers have demonstrated millisecond-scale coherence, the business case for quantum computing becomes much clearer. Companies can start planning real applications rather than just experiments.

Major corporations beyond the tech giants are paying attention. Financial institutions want quantum computers for portfolio optimization and risk analysis. Aerospace companies are interested in materials simulation and aerodynamics. Energy companies see potential in optimizing power grids and designing better batteries. As coherence times improve and error rates drop, these applications move closer to reality, which in turn attracts more investment in a virtuous cycle.

Remaining Technical Hurdles

Extended coherence time solves one critical problem but doesn’t eliminate all obstacles to practical quantum computing. Scaling up remains a major challenge. The Princeton demonstration involved individual qubits, but useful quantum computers will need thousands or millions of qubits all maintaining coherence simultaneously while connected to each other.

Gate fidelity presents another hurdle. This refers to how accurately quantum operations can be performed on qubits. Even with perfect coherence, if the operations that manipulate the qubits introduce errors, the computation will fail. Current systems achieve gate fidelities around 99.9%, but error correction algorithms generally need 99.99% or better to work efficiently.
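Small differences in gate fidelity compound quickly. As a simplified sketch, assuming every gate fails independently with the same probability, the chance that a circuit runs without any gate error is the fidelity raised to the number of gates:

```python
def circuit_success_probability(gate_fidelity: float, num_gates: int) -> float:
    """Probability that no gate errs, assuming independent, identical gates."""
    return gate_fidelity ** num_gates


for fidelity in (0.999, 0.9999):
    for gates in (100, 1_000, 10_000):
        p = circuit_success_probability(fidelity, gates)
        print(f"fidelity {fidelity}: {gates:>6,} gates -> {p:.1%} error-free")
```

At 99.9% fidelity, a 1,000-gate circuit finishes error-free only about 37% of the time under this model, versus about 90% at 99.99%.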

Connectivity between qubits matters too. In current architectures, each qubit can typically interact with only a few neighbors. Complex algorithms often require arbitrary qubits to interact with each other, necessitating sequences of operations that route information across the processor. These routing operations consume precious coherence time. Better coherence helps, but architectural improvements in how qubits connect will still be needed for the most demanding applications.
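The routing cost can be estimated with a simple model. The sketch below assumes a square grid where each qubit couples only to its four nearest neighbors, and that one of the two qubits is moved with SWAP operations until the pair is adjacent. The grid layout and function names are illustrative assumptions, not a description of any specific processor.

```python
Coord = tuple[int, int]  # (row, column) position on the assumed grid


def grid_distance(a: Coord, b: Coord) -> int:
    """Manhattan distance between two qubits on a nearest-neighbor grid."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def swaps_needed(a: Coord, b: Coord) -> int:
    """SWAPs required to bring qubit a next to qubit b (0 if already adjacent)."""
    return max(grid_distance(a, b) - 1, 0)


# Neighbors interact directly; distant qubits pay a routing cost first.
print(swaps_needed((0, 0), (0, 1)))  # adjacent qubits
print(swaps_needed((0, 0), (3, 4)))  # opposite corners of a 4x5 patch
```

Each SWAP takes time, so every extra step of distance eats into the coherence window before the intended operation even starts.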

The Road to 2030 and Beyond

The researchers behind this breakthrough have set a concrete marker by suggesting 2030 as a target for scientifically relevant quantum computers. That gives the industry only a few years to turn laboratory results into commercial products, which sounds ambitious but looks increasingly achievable given recent progress.

The next few years will likely see rapid iteration as companies race to integrate longer-coherence qubits into their systems. Google, IBM, and other quantum computing firms will need to evaluate whether adopting tantalum-on-silicon qubits makes sense for their architectures. Meanwhile, the scientific community will work to understand exactly why this material combination performs so well, potentially leading to even better alternatives.

By the end of the decade, quantum computing could finally transition from a research curiosity to a genuine industry. The first applications will likely be narrow and specialized—solving specific problems where quantum computers have clear advantages. But as the technology matures and coherence times continue improving, the range of tractable problems will expand. The breakthrough in coherence time isn’t the finish line, but it might be the clearest signal yet that we’re on the home stretch.

FAQs

What is coherence time?

Coherence time measures how long quantum information remains stable in a qubit before environmental interference causes it to degrade. Longer coherence allows more complex calculations.

Why does tantalum outperform aluminum?

Tantalum has fewer atomic-level defects than aluminum, providing a cleaner environment that preserves quantum states. Combined with high-purity silicon, it dramatically extends coherence time.

Does this make existing quantum processors obsolete?

Not immediately. The breakthrough offers a potential upgrade path that could be integrated into existing architectures, improving performance without requiring complete redesigns of current systems.

When could practical quantum computers arrive?

Researchers suggest scientifically relevant quantum computers could arrive by 2030. Early applications will likely focus on drug discovery, materials science, and optimization problems.

How cold do superconducting qubits need to be?

Superconducting qubits operate at temperatures near absolute zero, around 0.015 kelvin. This extreme cooling eliminates thermal noise that would otherwise destroy quantum coherence.