

At first, it sounds like a weird question. ChatGPT is just text on a screen, right? But dig a little deeper, and things get intense. The sudden rise in popularity of AI tools, especially ChatGPT, has sparked a massive increase in data center activity. And these data centers don’t just use electricity. They also consume huge amounts of water: not on your device, but in the backend infrastructure that powers all this AI magic.

So now people are starting to ask tough questions: What’s the environmental cost of asking a chatbot a question? And is there a point where these small, invisible costs actually start to matter? It’s the kind of stuff most of us never thought about. But once you do, you can’t unsee it. ChatGPT and other AI models might just be sipping water every time we type.


How Much Water Does ChatGPT Actually Use?

When you chat with ChatGPT, it’s easy to forget there’s a massive infrastructure working behind the scenes. Researchers have estimated that training GPT-3, the model family behind the original ChatGPT, consumed about 700,000 liters of water, roughly the amount needed to manufacture 370 BMW cars or 320 Tesla vehicles. But it’s not just the training phase; regular usage adds up too. By the same kind of estimate, ChatGPT uses about 500 milliliters of water for every 5 to 50 prompts, depending on where and when the request is served. This water is primarily used to cool the data centers that power the AI, preventing the servers from overheating. With millions of users interacting with ChatGPT daily, the cumulative water consumption is substantial. It’s a hidden cost that isn’t immediately apparent but has significant environmental implications.
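To make the per-prompt figure concrete, here is a minimal back-of-the-envelope sketch in Python. It only divides the 500 milliliter estimate quoted above by the two ends of the 5-to-50 prompt range; the function and constant names are illustrative, and the result is a rough range, not a measurement.

```python
# Rough per-prompt water estimate, using only the figures quoted above.
# Names here are illustrative; the numbers are estimates, not measurements.

BOTTLE_ML = 500  # milliliters of water per batch of prompts


def water_per_prompt_ml(prompts_per_bottle: int) -> float:
    """Milliliters of water attributed to one prompt."""
    return BOTTLE_ML / prompts_per_bottle


# Best case: 50 prompts share one 500 mL bottle.
low_ml = water_per_prompt_ml(50)
# Worst case: only 5 prompts share one 500 mL bottle.
high_ml = water_per_prompt_ml(5)

print(f"Estimated water per prompt: {low_ml:.0f} to {high_ml:.0f} mL")
```

In other words, each prompt accounts for somewhere between a couple of teaspoons and a small glass of water, depending on which end of the estimate applies.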

The Environmental Impact of AI's Water Consumption

The water consumption of AI data centers isn’t just a number; it’s a growing environmental concern. By one projection, AI may use up to 6.6 billion cubic meters of water a year by 2027, roughly six times the annual water use of Denmark. This massive water usage contributes to the depletion of freshwater resources, especially in regions already facing water scarcity. Beyond water, these data centers are energy-intensive, leading to significant carbon emissions. For instance, data centers contribute approximately 0.3% to global carbon emissions. The combination of high water and energy consumption underscores the urgent need for sustainable practices in AI infrastructure. Without intervention, the environmental footprint of AI will continue to expand, exacerbating existing ecological challenges.

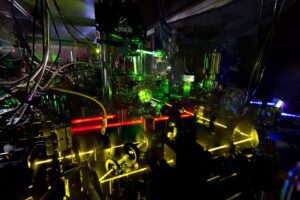

Why Is AI So Thirsty? Understanding the Water Demand

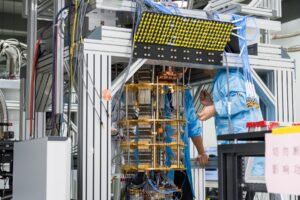

AI’s insatiable thirst for water stems primarily from the need to cool the high-performance servers that power these intelligent systems. As AI models become more complex, they require more computational power, leading to increased heat generation within data centers. To prevent overheating and ensure optimal performance, these servers rely heavily on cooling mechanisms, many of which utilize vast amounts of water. For instance, a small 1MW data center can consume up to 6.6 million gallons of water annually for cooling purposes. This significant water usage highlights the critical role water plays in maintaining the infrastructure that supports AI technologies. Moreover, the energy required to power these data centers often involves water-intensive processes, further amplifying AI’s overall water footprint. As AI continues to permeate various sectors, understanding and addressing its water consumption becomes imperative to ensure sustainable technological advancement.
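As a rough sanity check on the 1MW figure above, the sketch below converts 6.6 million US gallons to liters and spreads it over a year. The liters-per-kilowatt-hour number assumes the facility draws its full 1 MW around the clock, which is a simplifying assumption of this example and not something the figure itself states; real facilities usually run below full load.

```python
# Back-of-the-envelope conversion of the 1 MW data center figure.
# ASSUMPTION (not from the article): the facility draws a constant 1 MW all year.

LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon
GALLONS_PER_YEAR = 6.6e6            # figure quoted in the text
POWER_KW = 1_000                    # 1 MW expressed in kilowatts
HOURS_PER_YEAR = 8_760              # 365 days * 24 hours

liters_per_year = GALLONS_PER_YEAR * LITERS_PER_US_GALLON
liters_per_day = liters_per_year / 365

# Energy used in a year under the constant-load assumption.
kwh_per_year = POWER_KW * HOURS_PER_YEAR
liters_per_kwh = liters_per_year / kwh_per_year

print(f"{liters_per_year / 1e6:.1f} million liters per year")
print(f"{liters_per_day:,.0f} liters per day")
print(f"{liters_per_kwh:.2f} liters per kWh (constant-load assumption)")
```

Under these assumptions the figure works out to about 25 million liters a year, or a little under 3 liters for every kilowatt-hour of server energy.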

Innovations Aimed at Reducing AI's Water Footprint

The tech industry is actively seeking solutions to mitigate the significant water consumption associated with AI data centers. One promising approach is the adoption of advanced cooling technologies. For instance, liquid immersion cooling submerges servers in non-conductive liquids, effectively dissipating heat without the extensive water use typical of traditional cooling methods. Companies like OVHcloud have implemented such systems, achieving notable reductions in water and energy consumption.

There is also a growing emphasis on recycling and reusing water within data center operations. By treating and recirculating water, facilities can significantly decrease their freshwater withdrawals. For example, Amazon Web Services (AWS) has invested in on-site water treatment facilities, allowing for multiple reuse cycles and reducing overall water intake.

Finally, the geographical placement of data centers plays a crucial role. Building facilities in cooler climates reduces the need for intensive cooling, while siting them in regions with abundant water puts less strain on local supplies. By combining these approaches, the tech industry aims to balance the growing demands of AI with sustainable environmental practices.

The Role of Policy and Regulation in Managing AI's Environmental Impact

As AI technologies advance, their environmental effects, particularly on water use, have drawn attention from policymakers and regulators. In regions like Virginia, USA, where data centers are prevalent, legislation is being introduced to require data centers to report their water consumption, addressing community concerns over resource depletion. Similarly, in the UK, discussions are underway about the placement of AI growth zones near water sources, emphasizing the need for sustainable development practices. These regulatory measures aim to balance technological innovation with environmental stewardship, ensuring that AI’s growth doesn’t come at the expense of vital natural resources. There’s also a push for greater transparency from tech companies about their environmental footprints, including standardized reporting on water and energy usage. Such policies not only promote accountability but also encourage the adoption of sustainable practices within the industry. As the dialogue between technology developers and regulators continues, it’s imperative to establish frameworks that prioritize both innovation and ecological responsibility.

Tech Giants Respond—What Are Microsoft, Google, and OpenAI Doing?

Big tech companies like Microsoft, Google, and OpenAI are finally addressing the backlash over AI’s hidden water usage. And it’s not just lip service. After studies revealed that training GPT-3 used enough water to fill a small swimming pool, Microsoft and OpenAI started publicly discussing ways to reduce their water footprint.

Microsoft, for example, has pledged to become “water positive” by 2030—meaning it will replenish more water than it consumes. They’re investing in water restoration projects and experimenting with new cooling technologies for their Azure data centers. Google, on the other hand, is taking a location-specific approach—using seawater cooling in Finland and recycled wastewater in some U.S. facilities. It’s a more practical response that looks at climate and water availability on a case-by-case basis.

Meanwhile, OpenAI has stayed relatively quiet about the water stats but is collaborating with Microsoft to improve efficiency in future models like GPT-5. These companies know they’re under a microscope—and users want answers.

How Users Like You Contribute to AI's Water Use Without Realizing It

It might sound wild, but yes—your simple questions to ChatGPT contribute to water usage. Every time you type and hit enter, servers somewhere are working hard to generate a reply. Those servers heat up, and cooling them down uses electricity and water—often more than you’d guess. A single ChatGPT conversation might not seem like a big deal, but multiply that by millions of users daily, and the numbers start to add up fast.

Some estimates say that just 20–50 prompts to ChatGPT could indirectly consume half a liter of clean water. And most of us don’t even realize it because there’s no visual clue or cost directly shown to us. It’s not your fault—tech companies rarely communicate this part of the equation.
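To see how quickly small amounts add up, here is a short illustrative calculation. It takes the 20 to 50 prompts per half liter estimate above and scales it to a daily prompt volume. The 10 million prompts per day used here is a made-up round number chosen purely for illustration, not a reported usage figure.

```python
# Scaling the per-prompt estimate to a daily total.
# ASSUMPTION: PROMPTS_PER_DAY is a hypothetical round number for illustration only.

HALF_LITER_ML = 500
PROMPTS_PER_DAY = 10_000_000  # hypothetical, not a reported statistic


def daily_water_liters(prompts_per_day: int, prompts_per_half_liter: int) -> float:
    """Total liters of water per day for a given prompt volume and efficiency."""
    ml_per_prompt = HALF_LITER_ML / prompts_per_half_liter
    return prompts_per_day * ml_per_prompt / 1000  # convert mL to liters


# Efficient end of the estimate: 50 prompts per half liter.
low_liters = daily_water_liters(PROMPTS_PER_DAY, 50)
# Thirsty end of the estimate: 20 prompts per half liter.
high_liters = daily_water_liters(PROMPTS_PER_DAY, 20)

print(f"{low_liters:,.0f} to {high_liters:,.0f} liters per day")
```

Even with this modest hypothetical volume, the daily total lands in the hundreds of thousands of liters, which is why individually tiny costs still matter at scale.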

Still, awareness matters. Knowing that your AI use has a physical cost might lead to more intentional use. Just like leaving a tap running isn’t ideal, spamming AI prompts without a purpose adds to the hidden environmental toll.

What Can We Do to Reduce AI’s Water Footprint? Practical Tips for Users

Reducing AI’s water footprint isn’t just the job of tech companies—users play a part too. You can help by using AI more thoughtfully and efficiently. Instead of firing off endless random questions, take a moment to think about what you really want to know. Consolidate your queries where possible. This reduces unnecessary server load, which in turn lowers energy and water use.

Another way is to support companies and services committed to sustainability. If your AI provider is transparent about their environmental impact and actively works to reduce water consumption, you’re indirectly encouraging better practices.

Finally, stay informed. The more people know about AI’s water footprint, the more pressure there will be on the industry to innovate responsibly. Sharing articles and talking about these hidden costs sparks conversations that tech companies cannot ignore.

Simple changes in how we interact with AI might seem small, but combined, they create a meaningful difference in conserving precious water resources.

The Future of AI: Balancing Innovation and Environmental Responsibility

The future of AI hinges on a delicate balance: pushing technological boundaries while protecting the planet’s resources. Water is crucial for cooling data centers, and as AI models grow larger and more complex, their environmental footprint grows too. But this doesn’t mean AI’s future is doomed.

Researchers and engineers are already exploring breakthroughs like AI models optimized for efficiency that require less computation, as well as new cooling techniques that dramatically reduce water use. Governments are stepping in with regulations to hold companies accountable, and users are becoming more conscious of their digital habits.

This shift toward sustainability could redefine the tech industry, making water-efficient AI not just an option but a standard. As consumers, staying informed and demanding transparency ensures that innovation doesn’t come at the cost of critical natural resources.

The story of AI and water is still unfolding — and it’s up to all of us to write the next chapter responsibly.

FAQs

Does ChatGPT really use water?

Yes, indirectly. ChatGPT runs on data centers that need water to cool their servers. While a single use doesn’t consume much, millions of daily users add up to significant water usage globally.

Why does AI need water?

AI data centers generate a lot of heat due to intense computations. Water is often used in cooling systems to keep servers from overheating, making water a key resource in running AI technologies.

Are tech companies trying to reduce AI’s water use?

Yes. Companies like Microsoft and Google are investing in advanced cooling technologies, water recycling, and locating data centers in cooler or water-rich areas to lower their water footprint.

Can users help reduce AI’s water footprint?

Absolutely. By using AI services thoughtfully and avoiding unnecessary queries, users can help reduce server load and indirectly decrease water and energy consumption.

Will AI’s water use become a serious problem?

It could if unchecked. But with growing awareness, innovations in technology, and stronger regulations, the industry aims to balance AI’s growth with sustainable water use.