Google Gemma is one of Google’s recent releases in artificial intelligence (AI): a family of compact language models that Google makes openly available to developers and researchers. In this article, we look at the features and capabilities of Google Gemma and at its significance for AI development.

Understanding Google Gemma

Google Gemma is a large language model (LLM): a machine learning model trained on large amounts of text so that it can understand and generate natural language. The model is designed to be adaptable, and developers can fine-tune it on their own data to improve its performance on a specific task. Google Gemma reflects the company’s commitment to innovation, offering sophisticated tools to streamline complex tasks.

Features and Capabilities

- Advanced Machine Learning: Google Gemma is built on modern deep learning techniques and learns its language abilities from large amounts of training text.

- Natural Language Processing: The model exhibits exceptional natural language processing (NLP) abilities, understanding and interpreting human language with remarkable accuracy.

- Data Analytics: Google Gemma can support data analytics by quickly summarizing and extracting insights from large volumes of text.

- Versatility: The AI model is versatile across various applications, from healthcare diagnostics to market trend analysis.

- Ease of Use: Despite its complexity, Google Gemma is accessible through widely used frameworks and libraries, which simplifies integrating AI into everyday tasks.

Potential Applications

Google Gemma has the potential to transform industries by enhancing decision-making, streamlining operations, and providing personalized user experiences. Some potential applications include:

- Healthcare: Providing diagnostic assistance, predicting patient outcomes, and personalizing treatment plans.

- Finance: Analyzing market data, identifying investment opportunities, and detecting fraudulent activities.

- Retail: Personalizing shopping experiences, managing inventory, and predicting consumer trends.

- Customer Service: Powering chatbots to deliver immediate, accurate customer support.

- Research and Development: Accelerating the pace of innovation by analyzing research data and predicting trends.

Gemma Surpasses Llama 2 in Benchmark Tests: A New Era of High-Performance Language Models

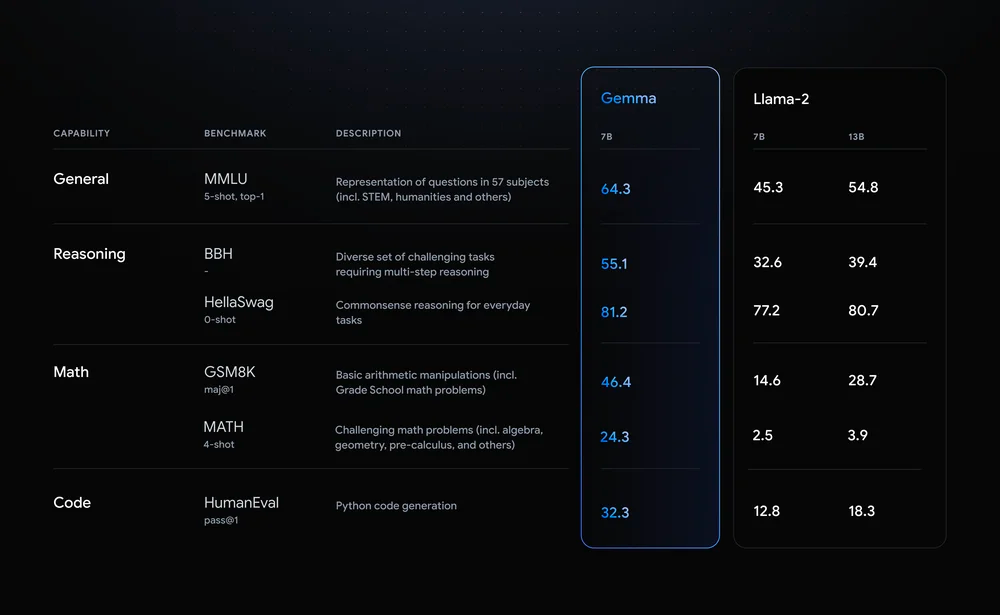

Gemma is an advanced language model designed with two configurations, one equipped with 7 billion parameters and the other with 2 billion. These configurations provide different levels of performance, scaling with the number of parameters—the larger model generally offering more nuanced understanding and generation capabilities.

When evaluated across a series of benchmarks, Gemma demonstrates higher general accuracy than its counterparts, including Meta’s language model, Llama 2. With a reported accuracy rate of 64.3% in its larger configuration, Gemma exceeds the performance of Llama 2 in key areas such as reasoning and mathematics, among others. This illustrates Gemma’s capability to understand and process information in a manner that closely aligns with the correct or expected outputs in these fields.

Gemma’s performance is an indication of the advancements in language model development, showcasing the potential for even more accurate and reliable natural language understanding and generation in AI applications.

Gemma Surpasses Llama 2 in Benchmark Tests: A New Era of High-Performance Language Models

Gemma is an advanced language model designed with two configurations, one equipped with 7 billion parameters and the other with 2 billion. These configurations provide different levels of performance, scaling with the number of parameters—the larger model generally offering more nuanced understanding and generation capabilities.

Pin

Pin Image by: Google

When evaluated across a series of benchmarks, Gemma demonstrates higher general accuracy than its counterparts, including Meta’s language model, Llama 2. With a reported accuracy rate of 64.3% in its larger configuration, Gemma exceeds the performance of Llama 2 in key areas such as reasoning and mathematics, among others. This illustrates Gemma’s capability to understand and process information in a manner that closely aligns with the correct or expected outputs in these fields.

Gemma’s performance is an indication of the advancements in language model development, showcasing the potential for even more accurate and reliable natural language understanding and generation in AI applications.

Features of Gemma LLM

1. Streamlined for Speed: Gemma LLM’s Efficient 2B and 7B Models Revolutionizing PC and Smartphone Capabilities

- Gemma LLM is designed with a compact structure, boasting 2B (billion) and 7B parameter models.

- The smaller size compared to larger models enables Gemma to process information more swiftly.

- This architecture requires less computational power, which makes Gemma suitable for PCs and smartphones.

2. Gemma LLM: Championing Collaboration with Open-Source Accessibility in AI

- In a domain often marked by proprietary technology, Gemma stands out as it is open-source.

- Developers and researchers can freely access Gemma’s code and model weights, subject to Google’s terms of use.

- The open-source nature encourages modification and improvement by the tech community.

3. Task-Mastered Tuning: Gemma’s Specialized Variants Elevate AI Precision in Targeted Applications

- Gemma extends its functionality with specialized versions tailored for particular tasks.

- These versions are fine-tuned for activities such as question-answer sessions and summarizing content.

- The optimization enhances how Gemma manages these specific tasks, improving user experience in practical scenarios.

Gemma LLM’s significance stretches far beyond its technical specifications. It represents a step towards widespread access to sophisticated language models, a feature that can lead to increased innovation and collaboration. The availability of Gemma as an open-source tool can potentially spark a surge in AI-based solutions and applications. Imagine developers creating more intuitive personal assistants, or researchers devising algorithms for advanced code automation — the possibilities are immense.

The inclusivity offered by Gemma allows a broader range of individuals and organizations to partake in the AI revolution. From crafting enhanced chatbots that can carry human-like conversations to assisting scientists in parsing through voluminous research data efficiently, Gemma’s applications are vast. This model is not just an AI tool but a catalyst for progress in natural language processing, potentially accelerating advancements and influencing the trajectory of AI development. By reducing the hurdles for entry into the world of LLMs, Gemma LLM could well be instrumental in shaping the future landscape of artificial intelligence.

Diving Deep into Gemma Variants

Gemma, a prominent member of Google’s LLM collection, stands out with its suite of versatile models—each tailored to specific developer needs and application scenarios. Let’s unfold the layers of Gemma to understand which variant might align best with your objectives.

Optimizing Performance: Matching Your Needs with the Right Gemma Model Size

2B Gemma: Lean yet Mighty

Strengths: If your environment is resource-constrained, the 2B Gemma is the model of choice. Engineered for efficiency, it has a modest appetite for computational power.

Use Cases: Given its compact design, the 2B is a wizard at text classification and straightforward Q&A tasks. This makes it an ally for chatbots and lightweight analytics.

Technical Details: Its memory footprint is close to 1.5GB with reduced-precision weights, which is small enough for CPUs and even mobile devices. Inference is fast, which makes it a good fit for real-time applications.

7B Gemma: The Equilibrium Model

Strengths: When the 2B’s vigor falls short, the 7B steps in, harnessing more horsepower while still keeping an eye on the efficiency gauge.

Use Cases: This middleweight contender is cut out for summarization and code generation, among other sophisticated endeavors.

Technical Details: It is more demanding, requiring about 5GB of memory with reduced-precision weights, but that is well within reach of the typical GPUs and TPUs on today’s market.

In selecting from the Gemma pantheon, balance your compute resources with your ambition’s magnitude, and these models will not disappoint.
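To make the trade-off concrete, here is a minimal sketch of a helper that picks the largest variant fitting a memory budget. It uses only the approximate footprints quoted in this article; the function name `pick_gemma_size` and the `headroom` safety factor are hypothetical and not part of any Gemma tooling.

```python
from typing import Optional

# Approximate memory needs in GB, taken from the figures quoted in this article.
# They are illustrative; real usage depends on precision and runtime overhead.
GEMMA_MEMORY_GB = {"2b": 1.5, "7b": 5.0}


def pick_gemma_size(available_gb: float, headroom: float = 1.2) -> Optional[str]:
    """Return the largest variant whose quoted footprint fits, or None.

    `headroom` is a hypothetical safety factor for framework overhead.
    """
    fitting = [
        (need, name)
        for name, need in GEMMA_MEMORY_GB.items()
        if need * headroom <= available_gb
    ]
    # max() compares the memory need first, so the largest fitting model wins.
    return max(fitting)[1] if fitting else None


if __name__ == "__main__":
    for gb in (1.0, 4.0, 16.0):
        print(f"{gb:>5.1f} GB available -> {pick_gemma_size(gb)}")
```

A device with 4GB of free memory would get the 2B model under these assumptions, while one with 16GB could host the 7B model.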

Base vs. Instruction-Tuned AI Models: Balancing Flexibility and Specialization

Base AI Models

General-Purpose Foundation: Base models are developed to have a broad understanding of language and knowledge. They can handle a variety of tasks because they are not pre-tuned for specific functions.

Need for Fine-Tuning: To achieve the best performance on a particular task, base models often require additional training. This fine-tuning process adjusts the model’s parameters to align with the specific nuances and requirements of the task at hand.

Customization Flexibility: With base models, there is significant flexibility to customize the AI to suit unique tasks. This makes them particularly suitable for innovative applications or when a specialized task is not covered by existing pre-trained models.

Instruction-Tuned AI Models

Pre-Trained on Specific Instructions: Instruction-tuned models are further trained on tasks like summarization, translation, or question answering, which means they are ‘taught’ to follow specific instructions effectively.

Immediate Usability for Targeted Tasks: Because they are pre-tuned, these models can be deployed quickly for their specialized tasks without additional training, providing immediate functionality right out of the box.

Sacrificed Generalizability: The trade-off with these models is that while they perform better on tasks they were tuned for, they may not adapt as well to tasks outside of their training scope. The instruction-tuned model’s performance on general tasks may be less optimal compared to base models.
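One practical difference between the two kinds of model is the prompt. A base model simply continues whatever text it is given, while an instruction-tuned model expects the conversation to be wrapped in turn markers. The sketch below assumes the `<start_of_turn>` and `<end_of_turn>` markers documented for Gemma’s instruction-tuned checkpoints; the helper name `format_gemma_chat` is hypothetical, and in real code it is safer to rely on the tokenizer’s own chat template.

```python
from typing import List, Tuple


def format_gemma_chat(turns: List[Tuple[str, str]]) -> str:
    """Render (role, text) turns in the dialogue format assumed above.

    Roles are "user" and "model". The result ends with an open model turn,
    so generation continues from there.
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unknown role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text.strip()}<end_of_turn>\n")
    # Leave the model turn open; the model writes its reply after this marker.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    print(format_gemma_chat([("user", "Summarize this article in one sentence.")]))
```

With a base model you would skip this wrapping and pass plain text, usually after fine-tuning the model on examples of the task.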

Comparative Memory Footprint Analysis: RAM Requirements for 2B and 7B AI Models Pre and Post Fine-Tuning

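- 2B Model Requirements: Approximately 1.5GB of RAM is needed to load a 2B model into memory. This figure excludes the additional overhead of the deep learning framework and the operating system, and it assumes reduced-precision (quantized) weights; at 16-bit precision, 2 billion parameters alone occupy about 4GB.

- 7B Model Requirements: A larger 7B model needs roughly 5GB of RAM with reduced-precision weights.

- Fine-Tuning Impact: A fine-tuned model occupies the same memory as the original when loaded, because the parameter count does not change. The fine-tuning process itself, however, needs considerably more memory than inference, since gradients and optimizer state must be held alongside the weights.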
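The arithmetic behind such figures is simple enough to sketch: weight memory is the parameter count times the bytes used per parameter. The example below uses the nominal counts implied by the model names (an assumption, since real checkpoints may contain somewhat more parameters) and a deliberately rough estimate for full fine-tuning with an Adam-style optimizer. All names in it are hypothetical.

```python
# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal parameter counts implied by the model names (assumption: the
# real checkpoints may contain somewhat more parameters than these).
NOMINAL_PARAMS = {"2b": 2e9, "7b": 7e9}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def full_finetune_memory_gb(num_params: float, dtype: str = "float32") -> float:
    """Rough floor for full fine-tuning with an Adam-style optimizer.

    Counts weights, gradients, and two optimizer moment buffers, all stored
    in `dtype`. Activations and framework overhead are ignored.
    """
    return 4 * weight_memory_gb(num_params, dtype)


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        for dtype in BYTES_PER_PARAM:
            print(f"{name} {dtype:>8}: {weight_memory_gb(params, dtype):5.1f} GB")
        print(f"{name} full fine-tune (float32): "
              f"{full_finetune_memory_gb(params):5.1f} GB")
```

Under these assumptions, 4-bit weights come to about 1GB for the 2B model and 3.5GB for the 7B model, which is in the neighborhood of the 1.5GB and 5GB quoted above once runtime overhead is added. The fine-tuning estimate shows why training needs far more memory than loading the model for inference.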

Inference Velocity Breakdown: Response Efficacy of 2B vs. 7B AI Models

- 2B Models: They stand out for their inference speed, processing inputs and producing outputs swiftly enough to be integrated into applications where response time is critical.

- 7B Models: While these larger models are indeed slower relative to 2B models, they provide a middle ground in speed that is faster than much larger language models, balancing performance and size.
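If response time matters for your application, it is worth measuring throughput rather than guessing. Here is a minimal sketch of a timing harness; `tokens_per_second` and `fake_generate` are hypothetical names, and the fake generator only stands in for a real model call so the example runs anywhere.

```python
import time
from typing import Callable, List


def tokens_per_second(
    generate: Callable[[str], List[str]], prompt: str, runs: int = 3
) -> float:
    """Average generated tokens per second over `runs` calls."""
    total_tokens = 0
    start = time.perf_counter()
    for _ in range(runs):
        total_tokens += len(generate(prompt))
    elapsed = time.perf_counter() - start
    return total_tokens / elapsed if elapsed > 0 else float("inf")


def fake_generate(prompt: str) -> List[str]:
    """Stand-in for a real model call; sleeps briefly to mimic latency."""
    time.sleep(0.01)
    return prompt.split()


if __name__ == "__main__":
    rate = tokens_per_second(fake_generate, "one two three four")
    print(f"{rate:.0f} tokens/s")
```

To compare the 2B and 7B models, you would pass a function that calls each real model with the same prompt and generation settings, ideally after a warm-up run.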

Cross-Platform Flexibility: TensorFlow, PyTorch, and JAX Compatibility with 2B and 7B AI Models

- TensorFlow Compatibility: Both 2B and 7B models can be utilized within the TensorFlow ecosystem, allowing for integration with one of the most popular machine learning libraries.

- PyTorch Compatibility: PyTorch users can also run both model sizes and benefit from the dynamic computation graphs favored by many research and development teams.

- JAX Compatibility: JAX is designed for high-performance machine learning research. The compatibility of both model sizes with JAX is particularly beneficial for projects aiming to leverage automatic differentiation and GPU/TPU acceleration.

For developers, the choice between a 2B and 7B model is a balance of computational resources and application needs. Smaller models enable rapid iteration and serve well in latency-sensitive scenarios, while larger models excel in complex tasks where the depth of understanding may be paramount. Framework flexibility ensures that developers can work within their environment of choice without concern for model integration issues.
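One way to get this flexibility in practice is Keras 3, which can run the same model code on JAX, TensorFlow, or PyTorch. The sketch below assumes the KerasNLP package, its `GemmaCausalLM` class, and the `gemma_2b_en` preset name; check the current KerasNLP documentation before relying on them. The helper names are hypothetical, and downloading the preset requires accepting the model terms first.

```python
import os

SUPPORTED_BACKENDS = ("jax", "tensorflow", "torch")


def select_backend(name: str) -> str:
    """Set the KERAS_BACKEND variable; call this before keras is first imported."""
    if name not in SUPPORTED_BACKENDS:
        raise ValueError(f"backend must be one of {SUPPORTED_BACKENDS}, got {name!r}")
    os.environ["KERAS_BACKEND"] = name
    return name


def load_gemma(preset: str = "gemma_2b_en"):
    """Load a Gemma causal LM through KerasNLP (downloads weights on first use)."""
    # Imported lazily so that the backend chosen above takes effect.
    import keras_nlp

    return keras_nlp.models.GemmaCausalLM.from_preset(preset)


# Example usage (needs keras, keras_nlp, and access to the Gemma weights):
#   select_backend("jax")
#   model = load_gemma()
#   print(model.generate("What is Gemma?", max_length=64))
```

Because the backend is chosen through an environment variable, the same script can be switched between frameworks without touching the model code.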

Unlocking Gemma's AI Potential: A Guide to Customizable LLM Deployment and Integration

1. Understanding Gemma:

- Gemma is a part of Google’s collection of open-source large language models (LLMs).

- It is designed to enable developers and researchers to harness AI capabilities.

2. Platform Flexibility:

- Gemma is compatible with various hardware including CPUs, GPUs, and TPUs.

- For CPUs, use the Hugging Face Transformers library or Google’s TensorFlow Lite.

- For enhanced performance with GPUs/TPUs, use a full framework such as TensorFlow, PyTorch, or JAX.

3. Running Gemma:

- Choose the appropriate hardware setup based on availability and performance needs.

- Install the necessary libraries and dependencies tailored to your hardware choice.

4. Using Cloud Services:

- Google Cloud Vertex AI is recommended for cloud-based usage.

- It offers ease of integration and scales according to your project requirements.

5. Accessing Pre-trained Models:

- Gemma offers a range of pre-trained models like Gemma 2B and 7B.

- These models cater to tasks such as text generation, translation, and question-answering.

6. Instruction-Tuned Models:

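- Gemma also provides instruction-tuned variants of both sizes, published with an "-it" suffix (for example, gemma-2b-it and gemma-7b-it).

- These models are already tuned to follow instructions and can be used as-is. For enhanced personalization, fine-tune a model on your own dataset, typically starting from a base model.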

7. Building Applications:

- Utilize Gemma’s pre-trained models to build custom AI applications.

- Integrate your own data for models that reflect unique nuances and requirements.

8. Getting Started:

- Begin by setting up a development environment that can handle LLM workloads.

- Install Gemma on your chosen platform following the specific guidelines for CPUs, GPUs, or TPUs.

- Explore Gemma’s repository and documentation for specialized use cases.

By following these steps, you can get a head start with Gemma and create tailored AI-driven solutions.
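As a rough illustration of the steps above, here is a minimal sketch that loads a Gemma checkpoint with the Hugging Face Transformers library and generates text on the default device. It assumes the `transformers` and `torch` packages are installed and that the checkpoints are published under IDs of the form `google/gemma-2b-it`; access may also require accepting the model terms and logging in. The helper names are hypothetical.

```python
def gemma_model_id(size: str = "2b", instruction_tuned: bool = True) -> str:
    """Build a Hugging Face model ID such as "google/gemma-2b-it" (assumed naming)."""
    if size not in ("2b", "7b"):
        raise ValueError(f"size must be '2b' or '7b', got {size!r}")
    return f"google/gemma-{size}" + ("-it" if instruction_tuned else "")


def generate(prompt: str, size: str = "2b", max_new_tokens: int = 64) -> str:
    """Load the model and return generated text for `prompt`."""
    # Imported lazily so the helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = gemma_model_id(size)
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize, generate, and decode the first (only) returned sequence.
    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(outputs[0], skip_special_tokens=True)


# Example usage (downloads several GB of weights on first run):
#   print(generate("Explain what a language model is in one sentence."))
```

Loading the model inside `generate` keeps the sketch short; a real application would load the tokenizer and model once and reuse them across calls.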

Building Tomorrow: Exciting Applications Powered by Gemma

1. Crafting Dynamic Story-worlds with Advanced Text Generation:

- Utilize sophisticated text generation algorithms to create immersive stories.

- Tailor narratives to specific genres, themes, or audiences.

- Infuse storytelling with compelling characters and dynamic plotlines.

- Allow for interactive storytelling, where readers influence the story’s outcome.

2. Language Translation Made Easy:

- Draw on the language knowledge Gemma acquired during training for high-quality translations.

- Achieve near-instantaneous conversion between multiple languages.

- Maintain context and nuance across translations to enhance readability.

- Simplify communication in multilingual environments, such as global businesses or diverse online communities.

3. Reliable Answers on Demand with Gemma’s Question Answering:

- Employ question-answering frameworks to provide accurate information.

- Integrate with databases and the internet to source the latest data.

- Support educational platforms with instant, reliable answers to academic queries.

- Enhance customer support by resolving questions with precise, informed responses.

4. Igniting Creative Collaboration with Gemma’s Content Generation:

- Innovate in poetry and prose creation, offering fresh, AI-generated compositions.

- Facilitate scriptwriting for plays, movies, or video games with automated brainstorming tools.

- Pioneer in code generation by assisting developers in writing and debugging software.

- Explore the production of creative media, like music lyrics or visual art descriptions, through AI collaboration.
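In practice, most of these applications come down to sending the model a well-structured prompt. The sketch below shows one way to organize prompts for the four use cases above. The templates and the `build_prompt` helper are hypothetical illustrations, not prompts recommended by Google, and the resulting string would still need to be passed to a Gemma model.

```python
# Hypothetical prompt templates, one per application area described above.
TASK_TEMPLATES = {
    "story": "Write a short {genre} story for {audience}. Premise: {text}",
    "translate": "Translate the following text into {target_language}:\n{text}",
    "qa": (
        "Answer the question using only the context.\n"
        "Context: {context}\nQuestion: {text}"
    ),
    "code": "Write a {language} function that does the following:\n{text}",
}


def build_prompt(task: str, **fields: str) -> str:
    """Fill in the template for `task`; raises ValueError for unknown tasks."""
    try:
        template = TASK_TEMPLATES[task]
    except KeyError:
        raise ValueError(f"unknown task: {task!r}") from None
    # str.format raises KeyError if a field required by the template is missing.
    return template.format(**fields)


if __name__ == "__main__":
    print(build_prompt("translate", target_language="French", text="Good morning"))
    print(build_prompt("story", genre="mystery", audience="teenagers",
                       text="A lighthouse keeper finds a locked box."))
```

Keeping templates in one place makes it easy to adjust wording per task and to compare how the 2B and 7B models respond to the same prompt.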

Gemma's Ascent: Shaping the Future of Large Language Models Through Open Collaboration and Innovation

1. Burgeoning Growth in LLM Domain

- Gemma, with its open-source model, is at the forefront of attention.

- Its performance generates excitement across the large language model (LLM) community.

2. Prospects for Gemma

- Anticipation of what the future holds for this innovative series of models is high.

3. Collaboration and Accelerated Innovation

- Gemma’s open nature encourages worldwide partnerships, enhancing the model.

- Contributions from a global pool of researchers improve model capabilities.

4. Developmental Leap in Interpretability and Fairness

- Efforts are under way to make Gemma’s decisions easier to interpret and less biased.

- Strides are being made to ensure that outcomes are transparent and equitable.

5. Efficiency Enhancements

- There is a concerted focus on making Gemma more energy-efficient and less computationally demanding.

- A path to a more sustainable and quicker LLM is in the works.

6. Exploration into Multi-modality

- Gemma is expected to push into multi-modal frontiers.

- Anticipate abilities beyond textual analysis to include images, audio, and video comprehension.

7. Democratization of AI

- Gemma’s approach aims to break barriers and democratize AI.

- Its capabilities aspire to serve a broad spectrum of users equitably.

8. Inclusivity and Accessibility

- There’s an optimistic future where AI, represented by Gemma, is accessible to all.

- Gemma signifies movement towards inclusive technology.

9. Groundbreaking Applications on the Horizon

- Innovative uses of Gemma are likely to surface as the model evolves.

- Diverse fields will potentially benefit from Gemma’s capabilities.

10. Sustainable and Continuous Growth

- Open-source ensures that Gemma will keep growing through community involvement.

- The model stands as a dynamic entity, likely to adapt to changing technological landscapes.

11. Impactful Presence in LLM Space

- Gemma is poised to have a lasting influence on the trajectory of future LLMs.

- The model’s impact is projected to be both profound and far-reaching in the realm of AI.

Gemma’s future appears to be one marked by robust collaborative growth, diverse application possibilities, and a steadfast commitment to improving the accessibility and functionality of large language models.

Conclusion

Google Gemma stands as a testament to Google’s ingenuity in the field of artificial intelligence. Its ability to handle complex tasks with precision and adaptability makes it a valuable asset for businesses and individuals alike. As AI continues to evolve, Google Gemma will likely play a significant role in shaping the future of technology, offering solutions that were once thought impossible.

Key Takeaways

- Accessibility for All: Gemma’s sleek design and open-source status ensure it’s easy to use on various platforms, widening the accessibility for users ranging from solo developers to small businesses.

- Tailored Intelligence: Offering a spectrum of sizes and specialized tuning, Gemma meets a multitude of demands, enabling everything from simple Q&A to intricate creative tasks.

- Customization is Key: With fine-tuning options, Gemma can be precisely adapted to individual requirements, paving the way for groundbreaking applications across different industries.

- Community-Driven Growth: The community around Gemma is its heartbeat, working together to push the boundaries in understanding AI decisions, ethical AI use, and expanding into multi-modal functions.

FAQs

Gemma LLM’s efficiency lies in its compact structure, featuring models with 2 billion and 7 billion parameters. These streamlined models allow for faster data processing and require less computational power, making them well-suited for PCs and smartphones.

Although smaller in parameter count compared to some large-scale models, Gemma remains competitive due to its speed and efficiency. This makes it an attractive choice for platforms with limited computing resources.

Gemma LLM differentiates itself by allowing full access to its code framework and parameters. This encourages tech enthusiasts, developers, and researchers to collaborate and innovate within the AI field.

Being open-source, Gemma allows and promotes modification and enhancement from the tech community. This collaborative environment can lead to rapid advances in AI development and application.

Gemma LLM offers specialized variants designed for specific tasks like question-answering systems or summarization tasks, providing users with precision-tuned tools for enhanced performance in these areas.

These specialized models are optimized for particular functions, which means Gemma can handle such tasks with greater accuracy and relevance, thereby offering a better user experience for targeted applications.